Best Paper Award at NeurIPS Workshop

Our contribution "UMBRELLA: Uncertainty-Aware Model-Based Offline Reinforcement Learning Leveraging Planning" received the Best Paper Award at the NeurIPS 2021 Workshop on Machine Learning for Autonomous Driving.

Although online reinforcement learning has shown promising results in simulated environments, it is often still not applicable to safety-critical applications because it relies on trial-and-error learning. In the offline reinforcement learning setting, the agent learns from a previously collected dataset and does not need to interact with the environment.

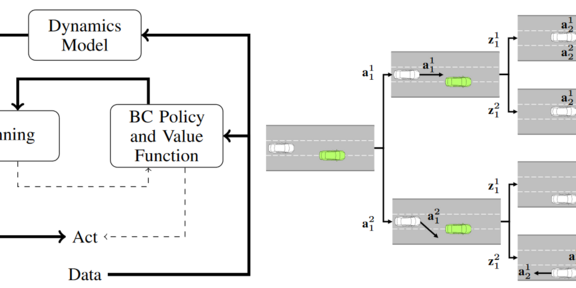

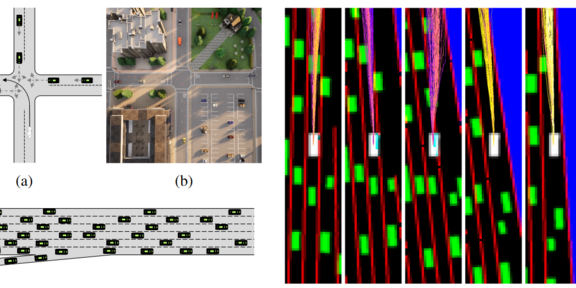

The developed approach solves the prediction, planning, and control problem of automated driving in multi-agent environments and accounts for both aleatoric and epistemic uncertainty. The interaction between prediction and planning is modeled by a learned action-conditioned stochastic dynamics model, which predicts the next state based on the previous state, the current action, and a sampled latent variable. A behavior-cloned policy guides the action sampling. By sampling different latent variables, we can predict different future scene evolutions. We roll out multiple future scene evolutions and then choose the optimal action using a stochastic optimization technique. This model-based design makes the approach highly interpretable.
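The planning loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the UMBRELLA implementation. The dynamics model, behavior-cloned policy and cost function are hypothetical hand-written stand-ins for learned networks and a task-specific reward. Only aleatoric uncertainty (the latent variable `z`) is modeled; epistemic uncertainty is left out. The final step uses an MPPI-style exponentially weighted average of sampled first actions as one example of a stochastic optimization technique, since the post does not say which technique is used.

```python
import math
import random

# NOTE: every component below is a hypothetical stand-in. In UMBRELLA these
# are learned models; the toy versions only illustrate the control flow.

def dynamics(state, action, z):
    """Toy action-conditioned stochastic dynamics model.

    Predicts the next (position, velocity) from the previous state,
    the current action and a sampled latent variable z.
    """
    pos, vel = state
    vel = vel + 0.1 * action + 0.05 * z  # the latent z perturbs the transition
    return (pos + 0.1 * vel, vel)


def bc_policy(state):
    """Toy behavior-cloned policy that proposes a nominal action."""
    pos, vel = state
    return -0.5 * pos - 0.2 * vel


def step_cost(state, action):
    """Toy cost: stay near position 0 while using small actions."""
    pos, _ = state
    return pos ** 2 + 0.1 * action ** 2


def plan(state, horizon=10, n_candidates=64, n_latents=8,
         noise=0.3, kappa=5.0, seed=0):
    """Return the action to execute now (hypothetical parameters)."""
    rng = random.Random(seed)
    first_actions, costs = [], []
    for _ in range(n_candidates):
        # 1) Sample an action sequence guided by the BC policy plus noise,
        #    advancing the state with the nominal prediction (z = 0).
        s, actions = state, []
        for _ in range(horizon):
            a = bc_policy(s) + rng.gauss(0.0, noise)
            actions.append(a)
            s = dynamics(s, a, 0.0)
        # 2) Evaluate the sequence under several sampled latent sequences,
        #    i.e. several possible future scene evolutions, and average.
        total = 0.0
        for _ in range(n_latents):
            s = state
            for a in actions:
                total += step_cost(s, a)
                s = dynamics(s, a, rng.gauss(0.0, 1.0))
        first_actions.append(actions[0])
        costs.append(total / n_latents)
    # 3) MPPI-style update: weight candidates by exp(-kappa * relative cost)
    #    and average their first actions. The best candidate has weight 1,
    #    so the denominator is never zero.
    best = min(costs)
    weights = [math.exp(-kappa * (c - best)) for c in costs]
    return sum(w * a for w, a in zip(weights, first_actions)) / sum(weights)
```

For example, `plan((1.0, 0.0))` returns a negative action that pushes the toy vehicle back toward position 0. In a receding-horizon setting, only this first action would be executed before planning again from the next observed state. Averaging over latent samples makes the choice robust to the spread of predicted futures rather than to a single most-likely one.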

Workshop: https://ml4ad.github.io/

Paper: https://arxiv.org/abs/2111.11097

This research was supported by the Federal Ministry for Economic Affairs and Energy on the basis of a decision by the German Bundestag.