AIR - AI Based Imaging Radar Data Processing

Motivation

As driving functions become more automated, perceiving the environment becomes more important. The more accurate and precise the perception, the less often the automated vehicle has to behave uncomfortably or replan its motion at short notice.

High-resolution lidar sensors can perceive the environment extremely precisely, but their high cost prevents broad market entry. Traditional radar sensors have long been used in the automotive industry, whereas high-resolution radar sensors, a possible alternative, have only recently become available. However, even high-resolution radar sensors do not reach the point cloud density of lidar sensors. Therefore, the AIR project investigates how high-resolution radar-based perception needs to be adapted to make the best use of radar-specific characteristics (e.g., Doppler velocity and robustness) and to replace lidars as far as possible.

3D Object Detection

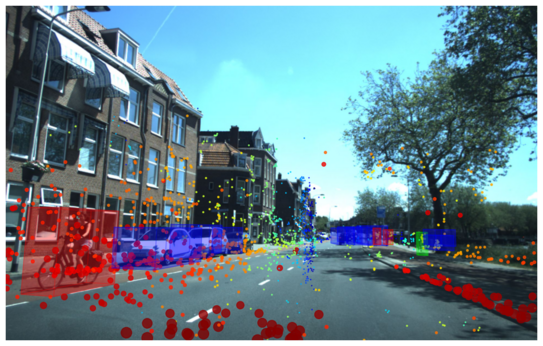

An important task in perception is detecting (dynamic) objects in 3D space. Many learning-based approaches exist that estimate object position and size from lidar point clouds with high accuracy. First results indicate that 3D object detection from high-resolution radar point clouds is possible, too.

However, the significantly lower point cloud density of radars compared to lidars remains a great challenge. Therefore, existing 3D object detection approaches serve as a baseline to be extended by dedicated modules or methods that specifically address issues such as missing or false detections. One key aspect of perception is the integration of temporal context. First, correct detections in one time frame should be re-identified in the next one and tracked according to their dynamics. Second, it seems sensible to accumulate single frames to artificially densify the data, particularly for sparse radar point clouds. In both cases, correctly accounting for the motion of dynamic objects is of paramount importance. Radars can directly determine the relative radial velocity by measuring the Doppler shift in a single measurement cycle. Integrating this radar-specific measurement, as well as a mono camera as an additional input, will also be investigated as the project continues.
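The two ideas in the paragraph above, accumulating frames and using the Doppler-derived radial velocity, can be illustrated with a minimal 2D sketch. It is not the project's implementation. The function names, the `(x, y, yaw)` ego pose format, and the restriction to 2D are all assumptions made for illustration. `radial_velocity` shows which part of a target's relative velocity a radar measures, and `accumulate_frames` merges several frames into the most recent sensor frame using known ego poses.

```python
import math


def radial_velocity(position, velocity):
    """Project a relative velocity onto the line of sight to the target.

    position and velocity are (x, y) tuples in the sensor frame.
    A negative result means the target is approaching the sensor.
    """
    px, py = position
    vx, vy = velocity
    rng = math.hypot(px, py)
    if rng == 0.0:
        raise ValueError("target coincides with the sensor origin")
    return (px * vx + py * vy) / rng


def to_world(point, pose):
    """Transform a 2D point from the sensor frame into the world frame.

    pose is a hypothetical (x, y, yaw) ego pose in the world frame.
    """
    x, y = point
    px, py, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (px + c * x - s * y, py + s * x + c * y)


def to_sensor(point, pose):
    """Inverse of to_world: world frame back into the sensor frame."""
    x, y = point
    px, py, yaw = pose
    dx, dy = x - px, y - py
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


def accumulate_frames(frames, poses):
    """Merge all frames into the sensor frame of the latest pose.

    frames[i] is a list of (x, y) points measured at ego pose poses[i].
    Only ego motion is compensated; object motion is not.
    """
    target = poses[-1]
    merged = []
    for points, pose in zip(frames, poses):
        merged.extend(to_sensor(to_world(p, pose), target) for p in points)
    return merged
```

In this sketch, points on static objects line up after accumulation, while points on moving objects are smeared along their trajectory because only ego motion is compensated. The measured radial velocity gives one component of the object motion per point, so it is a natural candidate for reducing that smear, although how best to use it is exactly the kind of question the paragraph above leaves open.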

Objective

This project’s high-level goal is to improve radar-only and combined radar and mono-camera perception. Furthermore, the sensor-specific advantages of radar should be exploited so that the implemented perception can enable individual or even advanced automated driving features, coming as close as possible to a lidar-based system in terms of availability, quality, and robustness.

![](/storages/rst-etit/_processed_/e/8/csm_Technologie_RST_2018_339_a0a62aaa2f.jpg)