Driving Policy Optimization

A safe and comfortable trajectory for an automated vehicle can be described implicitly as the solution of a trajectory optimization problem. However, the computational cost of solving this problem may prevent real-time application. To reduce hardware requirements, the optimal trajectory can instead be approximated by a policy learned offline. Such a policy could be represented by a neural network, but the role of the individual network parameters and their interactions are hard to interpret. It is therefore desirable to describe the policy by a set of interpretable parameters that define rules for constructing the trajectory.
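The source does not specify the rules themselves. Purely as a hypothetical illustration, the sketch below describes a speed-only (longitudinal) policy by three interpretable parameters: a desired speed, a gain on the speed error, and a comfort bound on acceleration. The class name `RulePolicy`, its fields, and the time step are assumptions, not part of the original concept.

```python
from dataclasses import dataclass


@dataclass
class RulePolicy:
    """Hypothetical rule-based speed policy with interpretable parameters."""

    desired_speed: float  # [m/s] speed the rules steer toward
    speed_gain: float     # [1/s] how strongly the speed error is corrected
    max_accel: float      # [m/s^2] comfort bound on |acceleration|

    def rollout(self, v0: float, dt: float = 0.1, steps: int = 50) -> list[float]:
        """Build a speed trajectory by applying the rules at every time step."""
        speeds = [v0]
        for _ in range(steps):
            v = speeds[-1]
            # Rule 1: demand an acceleration proportional to the speed error.
            accel = self.speed_gain * (self.desired_speed - v)
            # Rule 2: never exceed the comfort bound.
            accel = max(-self.max_accel, min(self.max_accel, accel))
            speeds.append(v + accel * dt)
        return speeds


policy = RulePolicy(desired_speed=15.0, speed_gain=0.5, max_accel=2.0)
trajectory = policy.rollout(v0=10.0)
```

Unlike the weights of a neural network, each field here has a direct physical meaning; for example, lowering `max_accel` directly bounds how sharply the generated trajectory changes speed.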

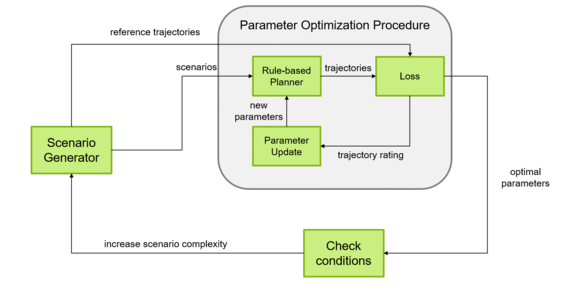

The parameters of the policy shall be chosen such that the policy reproduces a desired reference trajectory. A supervised learning scheme is used to find the optimal parameters. To this end, a set of on-road scenarios and corresponding optimal example trajectories is generated. During the optimization, the rule-based policy generates a trajectory for each scenario, and a loss function rates the deviation of this trajectory from the reference trajectory. The policy parameters are adjusted iteratively until the loss converges to a minimum.
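The training loop described above can be sketched as follows. Everything here is an assumption made for illustration: the two-parameter rule (`desired_speed`, `speed_gain`), the mean-squared-deviation loss, and the optimizer. Since the text does not name an optimizer, a simple derivative-free compass (pattern) search is swapped in; it probes each parameter by plus or minus a step size and halves the step when no probe lowers the loss. The reference trajectories are synthetic, generated by the same rule with known parameters, as a stand-in for the output of the trajectory optimizer.

```python
def rollout(params, v0, dt=0.1, steps=30):
    """Hypothetical rule: move the speed toward desired_speed with gain speed_gain."""
    desired_speed, speed_gain = params
    speeds = [v0]
    for _ in range(steps):
        speeds.append(speeds[-1] + dt * speed_gain * (desired_speed - speeds[-1]))
    return speeds


def loss(params, dataset):
    """Mean squared deviation of the policy trajectories from the references."""
    errors = [
        (v - v_ref) ** 2
        for v0, reference in dataset
        for v, v_ref in zip(rollout(params, v0), reference)
    ]
    return sum(errors) / len(errors)


def fit(dataset, init, step=1.0, tol=1e-6, max_iter=10_000):
    """Compass search: probe +/- step along each parameter, halve step when stuck."""
    params, best = list(init), loss(init, dataset)
    for _ in range(max_iter):
        if step < tol:  # search resolution reached: treat the loss as converged
            break
        improved = False
        for i in range(len(params)):
            for delta in (step, -step):
                trial = params.copy()
                trial[i] += delta
                trial_loss = loss(trial, dataset)
                if trial_loss < best:  # keep the first probe that lowers the loss
                    params, best, improved = trial, trial_loss, True
                    break
        if not improved:
            step /= 2
    return params, best


# Synthetic stand-in for the optimizer's reference trajectories (assumption).
TRUE_PARAMS = (15.0, 0.8)
dataset = [(v0, rollout(TRUE_PARAMS, v0)) for v0 in (5.0, 10.0, 20.0)]
params, final_loss = fit(dataset, init=(12.0, 0.5))
print(params, final_loss)
```

With synthetic references that the rule can reproduce exactly, the search should recover parameters close to `TRUE_PARAMS`; with real optimizer output, the final loss will generally remain above zero.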

However, the rule-based policy may not be able to reproduce the reference trajectory with the required accuracy in every scenario of interest. To identify a set of scenarios in which the required accuracy can be achieved, the complexity of the scenarios in the training data set could be increased gradually. Scenarios for which the required accuracy cannot be met must then be pruned from the set.
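The gradual increase in scenario complexity with pruning might look like the sketch below. The per-scenario complexity score, the scenario names, the accuracy threshold `max_loss`, and the reference data are all hypothetical. To keep the sketch short, the fit uses closed-form least squares on the one-step speed changes (under the assumed first-order rule, acceleration is linear in speed) rather than the iterative rollout-based optimization described above; the fitted parameters are then scored by the rollout deviation.

```python
import math
from typing import NamedTuple

DT = 0.1  # [s] sampling time (assumed)


class Scenario(NamedTuple):
    name: str
    complexity: int         # hypothetical difficulty score
    reference: list[float]  # reference speed trajectory [m/s]


def rollout(desired_speed, gain, v0, steps):
    """Hypothetical first-order rule: correct the speed error with a fixed gain."""
    speeds = [v0]
    for _ in range(steps):
        speeds.append(speeds[-1] + DT * gain * (desired_speed - speeds[-1]))
    return speeds


def fit(scenarios):
    """Least-squares fit of accel = gain * (desired_speed - v), scored by rollout MSE."""
    xs, ys = [], []
    for s in scenarios:
        for v, v_next in zip(s.reference, s.reference[1:]):
            xs.append(v)                  # current reference speed
            ys.append((v_next - v) / DT)  # reference acceleration
    x_mean, y_mean = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx == 0.0 or sxy == 0.0:
        return None, math.inf  # rule parameters not identifiable from these data
    gain = -sxy / sxx  # regression slope of accel over speed equals -gain
    desired_speed = (y_mean + gain * x_mean) / gain
    errors = []
    for s in scenarios:
        ref = s.reference
        simulated = rollout(desired_speed, gain, ref[0], len(ref) - 1)
        errors.extend((v - v_ref) ** 2 for v, v_ref in zip(simulated, ref))
    return (desired_speed, gain), sum(errors) / len(errors)


def curriculum(scenarios, max_loss):
    """Add scenarios from simple to complex; prune any that break the accuracy target."""
    accepted, pruned, params = [], [], None
    for scenario in sorted(scenarios, key=lambda s: s.complexity):
        trial_params, trial_loss = fit(accepted + [scenario])
        if trial_loss <= max_loss:
            accepted.append(scenario)
            params = trial_params
        else:
            pruned.append(scenario)  # required accuracy not reachable with it
    return accepted, pruned, params


def reference(v0):
    """Stand-in for optimizer output: the rule itself with (15 m/s, 0.8 1/s)."""
    return rollout(15.0, 0.8, v0, 20)


scenarios = [
    Scenario("stop_and_go", 3, [abs(8.0 - 0.8 * k) for k in range(21)]),
    Scenario("slow_down", 2, reference(20.0)),
    Scenario("speed_up", 1, reference(10.0)),
]
accepted, pruned, params = curriculum(scenarios, max_loss=0.01)
print([s.name for s in accepted], [s.name for s in pruned])
# ['speed_up', 'slow_down'] ['stop_and_go']
```

Here the V-shaped stop-and-go reference cannot be produced by a rule that only moves the speed monotonically toward a set point, so it is pruned, while the two scenarios generated by the rule itself are kept.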